- #STEPS TO DOWNLOAD AND INSTALL APACHE SPARK HOW TO#

- #STEPS TO DOWNLOAD AND INSTALL APACHE SPARK WINDOWS#

By completing this Apache Spark and Scala course, you will be able to:

1. Understand the limitations of MapReduce and the role of Spark in overcoming these limitations
2. Understand the fundamentals of the Scala programming language and its features
3. Explain and master the process of installing Spark as a standalone cluster
4. Develop expertise in using Resilient Distributed Datasets (RDDs) for creating applications in Spark
5. Master Structured Query Language (SQL) using Spark SQL
6. Gain a thorough understanding of Spark Streaming features
7. Master and describe the features of Spark ML programming and GraphX programming

This article has presented a step-by-step process for setting up an Apache Spark 3.1.1 cluster on Ubuntu 16.04. I hope you have successfully set up the Apache Spark cluster on your system. To stop the services of the Apache Spark cluster, run the following command.
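For a standalone cluster started with the scripts bundled in a standard Spark distribution, the usual way to stop the master and all workers is the `sbin/stop-all.sh` script. The sketch below wraps that call in Python. It assumes `SPARK_HOME` points at the unpacked distribution; the `/opt/spark` fallback and both function names are illustrative placeholders, not taken from the article.

```python
import os
import subprocess
from pathlib import Path


def stop_script(spark_home: str) -> Path:
    """Return the path of sbin/stop-all.sh inside a Spark distribution."""
    return Path(spark_home) / "sbin" / "stop-all.sh"


def stop_cluster(spark_home: str) -> int:
    """Run sbin/stop-all.sh and return its exit code."""
    script = stop_script(spark_home)
    if not script.is_file():
        raise FileNotFoundError(f"{script} not found; check SPARK_HOME")
    # The script stops the standalone master and the workers it manages.
    return subprocess.run(["bash", str(script)], check=False).returncode


if __name__ == "__main__":
    # /opt/spark is a hypothetical default, used only when SPARK_HOME is unset.
    home = os.environ.get("SPARK_HOME", "/opt/spark")
    # Only print the command here; call stop_cluster(home) to actually run it.
    print("Stop command: bash", stop_script(home))
```

Calling `stop_cluster(os.environ["SPARK_HOME"])` on the master node runs the script; the path check fails early with a clear message if the variable points at the wrong directory.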

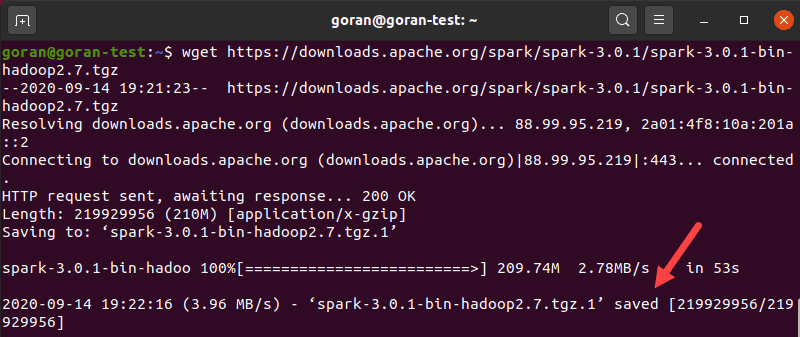

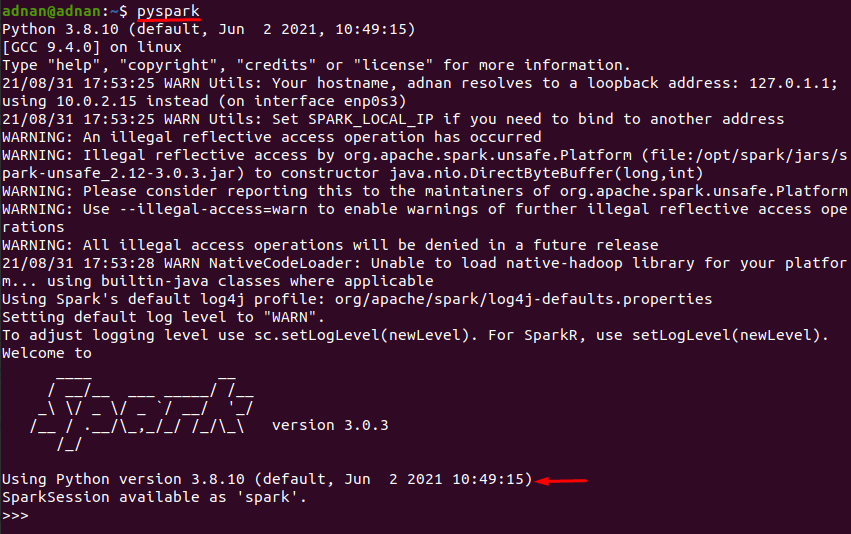

PySpark is a Spark library written in Python that lets you run Python applications using Apache Spark capabilities. There is no separate PySpark library to download; all you need is Spark. Follow the steps below to install PySpark on Windows. On the Spark download page, select the Download Spark link (point 3) to download it.

Simplilearn’s Apache Spark and Scala certification training is designed to:

1. Advance your expertise in the Big Data Hadoop ecosystem
2. Help you master essential Apache Spark skills, such as Spark Streaming, Spark SQL, machine learning programming, GraphX programming, and Shell Scripting Spark
3. Help you land a Hadoop developer job requiring Apache Spark expertise by giving you a real-life industry project coupled with 30 demos

The course is packed with real-life projects and case studies to be executed in the CloudLab.

This Apache Spark and Scala certification training is designed to advance your expertise in working with the Big Data Hadoop ecosystem. You will master essential skills of the Apache Spark open-source framework and the Scala programming language, including Spark Streaming, Spark SQL, machine learning programming, GraphX programming, and Shell Scripting Spark. This Scala certification course will give you vital skill sets and a competitive advantage for an exciting career as a Hadoop developer.

What is this Big Data Hadoop training course about? The Big Data Hadoop and Spark developer course has been designed to impart in-depth knowledge of Big Data processing using Hadoop and Spark.


Now, let's get started with installing Spark on Windows and get some hands-on experience.


This video on Spark installation shows you how to install and set up Apache Spark on Windows. First, you will see how to download the latest release of Spark. Then, you will set up the winutils executable file along with installing Spark. You will also see how to set up environment variables as part of this installation, and finally, you will run a small demo using Scala in Spark.
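The environment-variable and winutils setup mentioned above can be sanity-checked with a short script before launching Spark. This is a minimal sketch under assumptions: the text does not name the variables, so `JAVA_HOME`, `SPARK_HOME`, and `HADOOP_HOME` are the commonly used ones, with `winutils.exe` expected under `%HADOOP_HOME%\bin` and `spark-shell.cmd` under `%SPARK_HOME%\bin`. The function name `check_spark_env` is hypothetical.

```python
import os
from pathlib import Path

# Assumed variable names; the installation text does not list them explicitly.
REQUIRED_VARS = ("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME")


def check_spark_env(env) -> list:
    """Return a list of human-readable problems found in the environment mapping."""
    problems = []
    for var in REQUIRED_VARS:
        if not env.get(var):
            problems.append(f"{var} is not set")

    hadoop_home = env.get("HADOOP_HOME")
    if hadoop_home:
        # winutils.exe is conventionally placed in HADOOP_HOME\bin on Windows.
        winutils = Path(hadoop_home) / "bin" / "winutils.exe"
        if not winutils.is_file():
            problems.append(f"winutils.exe not found at {winutils}")

    spark_home = env.get("SPARK_HOME")
    if spark_home:
        # spark-shell.cmd is the Windows launcher for the Scala shell.
        launcher = Path(spark_home) / "bin" / "spark-shell.cmd"
        if not launcher.is_file():
            problems.append(f"spark-shell.cmd not found at {launcher}")

    return problems


if __name__ == "__main__":
    issues = check_spark_env(os.environ)
    for issue in issues:
        print("Problem:", issue)
    if not issues:
        print("Environment looks ready for spark-shell.")
```

Run it from a new Command Prompt after setting the variables, since already-open windows do not pick up changed environment variables.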
